Sometimes I wonder whether I have learned anything since I was a student. I usually conclude “a few things.” Recently, thinking about RNA-seq data sets involving >20,000 counts, I remembered the arrival of generalized linear models (GLMs) and generalized linear interactive modelling (GLIM), and how dramatically they changed my professional life.

As a student I had learned that when analysing “non-normal” quantities such as binary data, counts or proportions, there were a couple of options: transform and use a standard linear model, or follow Fisher (or Berkson) and do a probit (logit) analysis. Elsewhere in my course I learned about exponential families, but that was theoretical, not applied statistics. We all knew that the binomial, Poisson and normal distributions formed exponential families, and had sufficient statistics, but this wasn’t seen as having any implications for the analysis of “real” data. Nelder and Wedderburn’s 1972 JRSS A paper introducing GLMs changed all this for me, and I think for many others. It was a synthesis, uniting several existing models and methods under a novel framework, and suggesting new ones. At that time, the toolbox of the applied statistician seemed to be an ad hoc collection of disconnected tricks that worked with data, and this was immediately appealing as something new, useful and intellectually satisfying.

Also novel was the role of the iteratively reweighted least squares algorithm for fitting GLMs. I had learned the usual variants on Newton’s method, and Fisher scoring for maximizing likelihoods, but had never before viewed an algorithm as a central part of statistical thinking. In his 2003 Statistical Science interview with Stephen Senn, Nelder suggested that the original idea for GLMs came from his knowledge of “a set of models which had a single algorithm for their fitting,” and that “Wedderburn’s knowledge about the exponential families” then came in to complete the synthesis. Here was one of Britain’s leading statisticians admitting that he was not familiar with the basics of exponential families. But perhaps even worse, “a rather eminent statistical journal to which [the paper] was submitted first, turned it down flat without any opportunity to resubmit.” Nothing really new, not enough theorems, no asymptotics. This would not happen these days, would it? (Just joking.)
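For readers who have not met it, the single fitting algorithm mentioned above, iteratively reweighted least squares, can be sketched in a few lines. What follows is a minimal illustration in Python with NumPy for a Poisson model with log link, assuming a full-rank design matrix and a crude starting value of zero. The function name `irls_poisson` and the simulated data are hypothetical, chosen only for illustration, and this is not a reconstruction of how GLIM itself was written.

```python
import numpy as np


def irls_poisson(X, y, n_iter=25, tol=1e-8):
    """Fit a Poisson GLM with log link by iteratively reweighted least squares.

    Illustrative sketch only: assumes X has full column rank and that
    starting from beta = 0 is adequate for the data at hand.
    """
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        eta = X @ beta               # linear predictor
        mu = np.exp(eta)             # fitted means via the inverse log link
        z = eta + (y - mu) / mu      # working response
        w = mu                       # working weights: (dmu/deta)^2 / Var(y) = mu
        XtW = X.T * w                # X' W, with W diagonal
        beta_new = np.linalg.solve(XtW @ X, XtW @ z)  # weighted least squares step
        if np.max(np.abs(beta_new - beta)) < tol:
            return beta_new
        beta = beta_new
    return beta


# Simulated counts (hypothetical data, not from the article).
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 200)
X = np.column_stack([np.ones_like(x), x])
y = rng.poisson(np.exp(0.5 + 1.0 * x))

beta_hat = irls_poisson(X, y)
print(beta_hat)  # estimates of the intercept and slope
```

Changing the link or the variance function alters only the three lines that compute `mu`, `z` and `w`; the weighted least squares step stays the same, which is the sense in which one algorithm serves the whole family of models.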

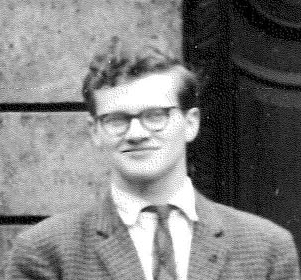

In the period immediately following the publication of the 1972 paper, there were a number of extensions of GLMs, including ones with constraints, and the very valuable notion of quasi-likelihood, both due to Wedderburn. This was just the beginning of what one might term the GLM industry: efforts to exploit the synthesis, to cover more and more of the routine tasks we need to carry out. At that time I was working in Perth, Australia, where our (mathematics) department had ample funds for visitors, and I formed the idea of inviting Robert Wedderburn to visit Perth to tell us about GLMs. Upon inquiring I was surprised and deeply saddened to learn that not long before he had died of anaphylactic shock from an insect bite while on a holiday.

Two years after the paper was published, the GLIM software was released, a product of the Royal Statistical Society’s Working Party on Statistical Computing that was initially chaired by Nelder. I don’t remember exactly when we got our hands on it in Perth, but it was relatively early on, due to the close relationship between statisticians in the CSIRO and those at Rothamsted. This was revolutionary for me. I’d made very limited use of statistical computing until then. I found the business of submitting blocks of punched cards to the computer centre tedious, as I was never careful enough to get it right first time. With GLIM, I could pretend that I was a computer whiz, and I did. The original GLM paper had a section entitled “The Models In The Teaching Of Statistics,” and I agreed wholeheartedly with what the authors said there. GLIM gave us the ability to put those fine sentiments into practice. For a short time I embraced GLMs and GLIM with all the zeal of a new convert. I even gave a couple of short courses about them. I particularly liked the fact that the theory of GLMs could be summarized on a single page. Equipped with this single page and a version of GLIM installed on their computer, people with theoretical backgrounds in statistics could experience the joys of applying statistics in a one-day course, starting from scratch. I saw several do so.

GLMs now have a firm place in the statistical canon. The shift from microarray to sequence data for gene expression occurred about six years ago, and statistically, this involved a shift from normal linear models to generalized linear models. We are deeply indebted to Nelder and Wedderburn’s insights and industry for providing the tools we need to analyse these important data.

Robert Wedderburn, pictured at Cambridge Statistical Laboratory in 1969. Wedderburn’s life was tragically cut short when he died of a bee sting, aged 28. Photo courtesy of Cambridge Statistical Laboratory, with thanks to Julia Blackwell.
