Not long ago I helped some people with the statistical analysis of their data. The approach I suggested worked reasonably well, somewhat better than the previously published approaches for dealing with that kind of data, and they were happy. But when they came to write it up, they wanted to describe our approach as principled, and I strongly objected. Why? Who doesn’t like to be seen as principled?

I have several reasons for disliking this adjective, and for not wanting to see it used to describe anything I do. My principal reason is that such a description carries the suggestion, usually implicit but at times explicit, that any other approach to the task is unprincipled. I’ve had to grin and bear this slur on my integrity many times in the writings of Bayesians. Not atypical is the following statement about probability theory in an article about Bayesian inference: that it “furnishes us with a principled and consistent framework for meaningful reasoning in the presence of uncertainty.” Not a Bayesian? Then your reasoning is likely to be unprincipled, inconsistent, and meaningless. Calling something one does “principled” makes me think of the comment by Hamlet’s mother, Queen Gertrude: “The lady doth protest too much, methinks.”

If a statistical analysis is clearly shown to be effective at answering the questions of interest, it gains nothing from being described as principled. And if it hasn’t been shown so, fine words are a poor substitute. In the write-up mentioned at the beginning of this piece, we compared different analyses, and so had no need to tell the reader that we were principled: our approach was shown to be effective.

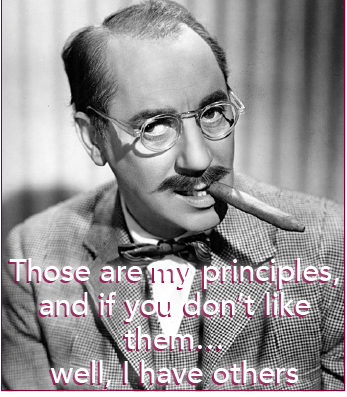

Of course there is the possibility that multiple approaches to the same problem are principled, and they just adhere to different principles. Indeed, one of the ironies in the fact that my collaborators wanted to describe our approach to the analysis of their data as principled is that a Bayesian approach is one of the alternatives. And as we have seen, all Bayesian analyses are principled. The reason my collaborators wanted to describe what we did as principled was to distinguish our approach from the non-statistical alternatives. To them, all statistical methods are principled. Groucho Marx said: “Those are my principles, and if you don’t like them… well, I have others.”

I have another reason for feeling ambivalent about principles in statistics. Many years ago, people spent time debating philosophies of statistical inference; some still do. I got absorbed in it for a period in the 1970s. At that time, there was much discussion about the Sufficiency Principle and the Conditionality Principle (each coming in strong and weak versions), the Ancillarity Principle, the Weak Repeated Sampling Principle, the Invariance (Equivariance) Principle, and others, not to mention the famous Likelihood Principle. There were examples, counter-examples, and theorems of the form “Principle A & Principle B implies Principle C”.

You might think that with so many principles of statistical inference, we’d always know what to do with the next set of data that walks in the door. But this is not so. The principles just mentioned all take as their starting point a statistical model, sometimes from a very restricted class of parametric models. Principles telling you how to get from the data and questions of interest to that point were prominent by their absence, and still are. Probability theory is little help to Bayesians when it comes to deciding on an initial probability model. Perhaps this is reasonable, as there is a difference between the philosophy of statistical inference and the art of making statistical inferences in a given context. We have lots of principles to guide us in the easy part of our analysis, but none for the hard part.

While the younger me spent time on all those Principles over 40 years ago, I wouldn’t teach them today, or even recommend the discussions as worth reading. But I do think there is a demand for the principles of what I’ll call initial data analysis, an encapsulation of the knowledge and experience of statisticians in dealing with the early part of an analysis, before we fix on a model or class of models. I am often asked by non-statisticians engaged in data science how they can learn applied statistics, and I don’t have a long list of places to send them. Whether what they need can be expressed in principles is not clear, but I think it’s worth trying. My first step in this direction was taken 16 years ago, when I posted two “Hints & Prejudices” on our microarray analysis web site, namely “Always log” and “Avoid assuming normality”. I am not against principles, but I like to remember Oscar Wilde’s aphorism: “Lean on principles, one day they’ll end up giving way.”
