Many of us were brought up to think that we cannot do a statistical analysis without making assumptions. We were taught to pay attention to assumptions, to consider whether or not they are approximately satisfied with our data, and to reflect on the likely impact of a violation of our assumptions. Our assumptions might concern independence, identical distribution, normality, additivity, linearity or equality of variances. (Needless to say, we have some tests for all these assumptions.)

I see much of this concern with assumptions as coming from the desire to reduce statistics to something close to mathematics. A typical mathematical theorem starts with hypotheses (e.g. axioms) and proceeds, through an accepted process of reasoning, to a conclusion. If your hypotheses are true and your reasoning is correct, then your conclusions will be true. The statistical version of this is that we have data and a question about the real world. We adopt a statistical model that embodies statistical assumptions, apply it together with some statistical methods to our data, and draw statistical conclusions about the real world. Our hope is that if our statistical assumptions are true, and our statistical methods are correctly applied, then our statistical conclusions about the real world will be true.

This analogy between statistical and mathematical reasoning is flawed, and not just because statistics involves uncertainty. Mathematical truth and real world truth are very different things. Data cannot always be understood by making statistical assumptions; sometimes we need to look at them and think hard about them in their context. Only rarely are all our assumptions testable, and often some will be implicit: we don’t even notice we are making them. J.W. Tukey has highlighted one: a belief in omnicompetence, the ability of a methodology to handle any situation. Also, seeking real world truth with (near) certainty is just one goal; there are many other performance metrics for statistical analyses. Most of us will have seen a statistical model or method used effectively even though the standard assumptions that go with it are transparently violated. This is just a paraphrase of George E.P. Box’s aphorism that all models are wrong, but some are useful. Assumptions don’t always matter. In this context, Tukey strikingly observed that, “In practice, methodologies have no assumptions and deliver no certainties.” He goes on to say, “One has to take this statement as a whole. So long as one does not ask for certainties one can be carefully imprecise about assumptions.”

But sometimes assumptions really do matter. Many of you will know Nate Silver’s *The Signal and the Noise*, in which he discusses the global financial crisis, among other topics. He explains how the financial services company Standard and Poor’s calculated the chance that a collateralized debt obligation would fail within a five-year period to be 0.12%, whereas around 28% of them actually failed. As a result, Silver writes, “trillions of dollars in investments that were rated as being almost completely safe instead turned out to be almost completely unsafe.” The problem was an inappropriate assumption of independence, a flaw that could have been foreseen had the matter been carefully considered.
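To see how much an independence assumption can matter, here is a minimal sketch with made-up numbers; it is not Standard and Poor’s model, nor Silver’s figures. Take a hypothetical pool of five loans, each with a 5% chance of default. If defaults are independent, the chance that all five fail is 0.05^5, about three in ten million; if the loans always fail together, that chance is the full 5%.

```python
from math import comb


def prob_at_least_k_defaults(n: int, p: float, k: int) -> float:
    """P(at least k of n loans default), ASSUMING independent defaults, each with probability p."""
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k, n + 1))


# Hypothetical illustration only: a pool of 5 loans, each with a 5% default chance.
n, p = 5, 0.05

# Under independence, all five must fail separately: p**n.
independent = prob_at_least_k_defaults(n, p, k=n)

# Under perfect correlation, the loans fail together, so all five fail with probability p.
perfectly_correlated = p

print(f"all five default, independent:          {independent:.3g}")
print(f"all five default, perfectly correlated: {perfectly_correlated:.3g}")
print(f"ratio: {perfectly_correlated / independent:,.0f}")
```

The two answers differ by a factor of about 160,000. Real portfolios lie somewhere between these extremes, which is exactly why the dependence assumption deserves careful thought rather than a default choice.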

Tukey is critical of talk of “checking the assumptions,” saying that he suspects much of it is being used as an excuse for “looking to see what is happening,” something that he applauds. He also gives four routes to understanding when a methodology functions well, and how well it functions, saying, “We dare not neglect any of them.” On the other hand, Silver gives examples from weather forecasting, earthquake prediction, epidemic and climate modelling where assumptions have a big impact on the predictions. Where does that leave us?

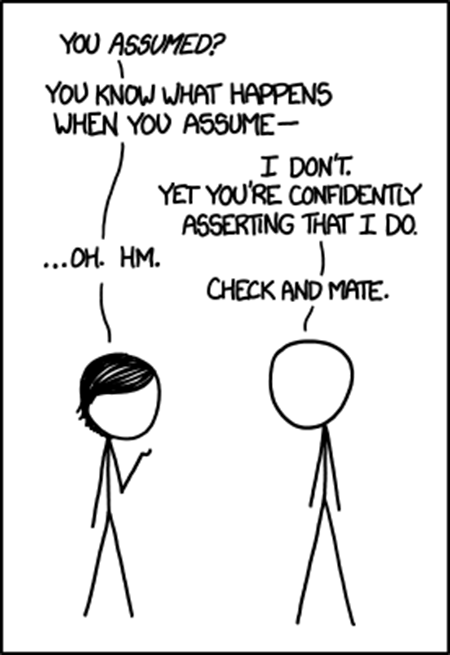

I think we all know that (with Silver) sometimes particular assumptions matter a lot, and that (with Tukey) sometimes they don’t matter much at all. Learning how to distinguish these times can be challenging, but Tukey’s four routes certainly help. I get irritated when I explain what I did in some statistical analysis, and a listener says, “I see, you are assuming blah blah….” I reply tartly, “No, I am not assuming blah blah… I am just doing what I did. Here’s why I thought it might help, and here’s why it did help.”

I think behind Tukey’s observations was an impatience with the mindless drive to automate what he called “routes to sanctification and certain truth.” An emphasis on enumerating and checking assumptions certainly looks like such a drive. He gives the example of spectrum analysis, something usually associated only with stationary processes, as having its “greatest triumphs” with a non-stationary series. He would have welcomed Silver’s book and wholeheartedly agreed with its focus on assumptions. To channel Box, “All assumptions are wrong but some are critical.”
