I like things that come in threes, one of my favourites being the three elements we might consider before a defendant can be regarded as guilty of a crime: did he or she have the means, motive, and opportunity? Or, that a good story has a beginning, middle and end. Or, that one recipe for a good talk is that you tell ’em what you are going to tell ’em, then you tell ’em, then you tell ’em what you told ’em.

I was recently in a discussion about ways of evaluating assays “composed of, or derived from, multiple molecular measurements and interpreted by a fully specified computational model to produce a clinically actionable result.” For example, we might take some cells from a tumor biopsy, measure the expression of many genes in those cells using a microarray or DNA sequencing, and then use a procedure based on that data to predict whether the patients in some class can forgo chemotherapy. (The desirability of avoiding unnecessary chemotherapy hardly needs explaining.)

How do we tell whether this test is worthwhile—that is, whether you or your insurer or your government should pay for it to be carried out on your tumor biopsy?

A point of view with which I have a lot of sympathy is that the three most important evaluation criteria are: (a) analytical validity, (b) clinical validity, and (c) clinical utility. Analytical validity means that your measurement process does a good job measuring what it is supposed to be measuring, and terms such as accuracy, precision, reproducibility, reliability, and robustness get used. More could be said, and much depends on the specifics of the assay, but I think you get the idea. In my example, we’d ask whether we get good gene expression measurements from the samples we are likely to be assaying.

Clinical validity refers to the extent to which the test (measurements plus computation) does a good job predicting the clinical feature of interest: in my example, which patients can forgo chemotherapy. Here we’re referring to the real-world performance of a predictor, and terms like sensitivity, specificity, false negative rate, false positive rate, positive predictive value, negative predictive value, accuracy, and receiver operating characteristic curves all get used.
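For readers who like to see such terms pinned down, here is a minimal sketch of how several of them fall out of a two-by-two table of test results against the truth. The counts are invented purely for illustration, and the names `ConfusionCounts` and `clinical_validity_metrics` are hypothetical rather than part of any particular assay or library. "Positive" here is assumed to mean "the test says this patient can forgo chemotherapy."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Counts from comparing test calls with a gold standard (hypothetical)."""
    tp: int  # test positive, truly positive
    fp: int  # test positive, truly negative
    fn: int  # test negative, truly positive
    tn: int  # test negative, truly negative


def clinical_validity_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Standard summary measures; assumes every denominator is nonzero."""
    total = c.tp + c.fp + c.fn + c.tn
    return {
        "sensitivity": c.tp / (c.tp + c.fn),          # true positive rate
        "specificity": c.tn / (c.tn + c.fp),          # true negative rate
        "false_negative_rate": c.fn / (c.tp + c.fn),  # 1 - sensitivity
        "false_positive_rate": c.fp / (c.tn + c.fp),  # 1 - specificity
        "ppv": c.tp / (c.tp + c.fp),                  # positive predictive value
        "npv": c.tn / (c.tn + c.fn),                  # negative predictive value
        "accuracy": (c.tp + c.tn) / total,
    }


# Made-up counts for 200 patients, for illustration only.
counts = ConfusionCounts(tp=80, fp=10, fn=20, tn=90)
metrics = clinical_validity_metrics(counts)

for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Note that the predictive values, unlike sensitivity and specificity, depend on how common the positive class is in the population being tested, which is one reason the "real-world" part of clinical validity matters.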

Clinical utility means that the assay adds real value to patient management: it leads to improved outcomes for the patient, compared with current management without the assay. Demonstrating clinical utility can be tricky, as the result will depend on the nature of the comparator, the extent of the comparative analysis, and other matters. In my example we should ask whether the test result frees further people from chemotherapy, without adverse consequences. We might add: to an extent that justifies its cost.

I hope all of this seems reasonable, and that you see there is plenty of room for discussion and research here. Patients, clinicians and those paying the bills all have an interest in getting it right. I also hope you are wondering why I’m telling you this, or perhaps you have guessed?

Statisticians often write papers in which they propose new ways of addressing problems old or new. In such papers, we typically see that in theory and in the simulated world the novel procedure does what the author claims it should do. I’ll call this a demonstration of analytical validity.

Next comes the question of how well a novel procedure performs in practice: not in theory, but with “real”, not simulated, data. I’ll call this applied validity. Satisfying this criterion requires an entirely different kind of demonstration, not theory, not simulation, but one clearly focused on what our procedure is designed to do, ideally with some “real” data that is accompanied by “truth” or a “gold standard.” More often than not, we need to use a lot of ingenuity to address this criterion, for “real” data with “truth” or “gold” can be hard to find. Some of you will have wrestled with this issue, but all too often we get one little “real” data example, which hardly satisfies my next criterion.

Of course, my name for that criterion, whether or not the new procedure is a real improvement over what we would have done without it, is applied utility. As with our molecular assays, how well we satisfy this criterion depends on our choice of comparators, and the nature of our comparison. This issue will also be familiar to statisticians.

I think that we statisticians focus too much on demonstrating analytical validity, that we pay relatively little attention to applied validity, and that we typically do a poor job with applied utility. I’d like to see more attention paid to the last two and the issues surrounding them. We should embrace the rule of three for statistical innovation: it works in theory, it works in practice, and it truly adds value.

—

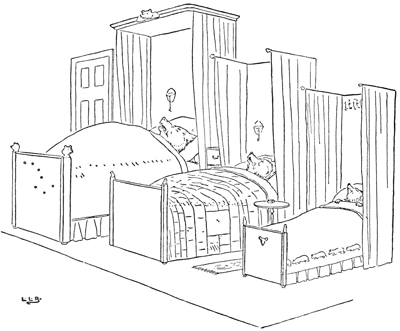

Goldilocks may disagree that good things always come in threes… The three bears, back in their beds after Goldilocks’ visit, as illustrated by Leonard Brooke in the 1900 edition of The Story of the Three Bears.
